Building Smarter AI Agents: A Framework for Integrating Short-Term, Long-Term, and Episodic Memory

Developing Robust Memory Architectures for AI Agents

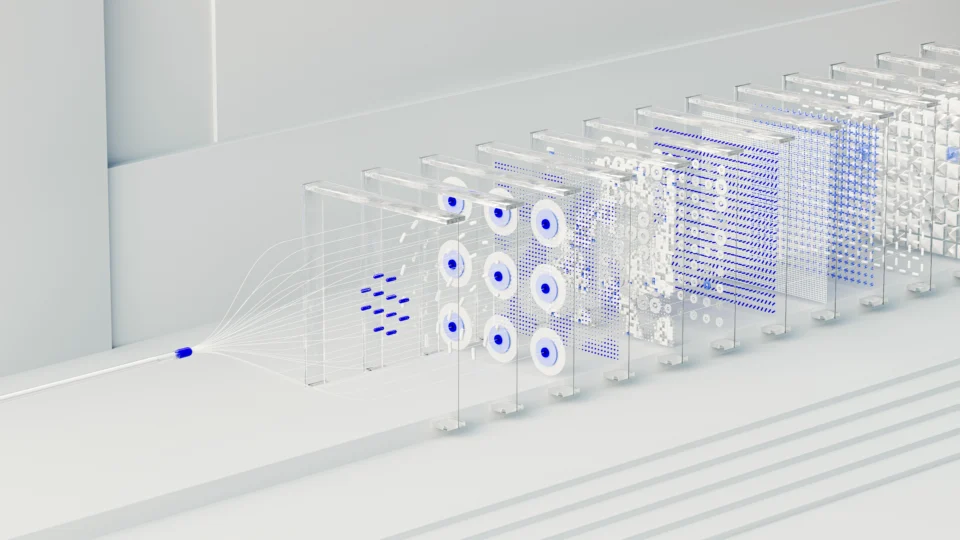

In the evolving landscape of artificial intelligence, effective memory management is crucial for agents that must handle complex tasks without redundant computation. Recent implementations demonstrate that AI agents equipped with layered memory systems, which distinguish short-term context from long-term knowledge and episodic experiences, can reach outcome scores of up to 0.90 (on a 0-to-1 scale) in simulated troubleshooting scenarios by reusing proven strategies and avoiding past failures. This approach addresses key limitations in traditional AI models, enabling more adaptive and efficient behavior in dynamic environments.

Core Components of the Memory System

The foundation of such memory-driven AI agents lies in structured data representations and efficient indexing mechanisms. Developers can implement distinct schemas for different memory types to ensure organized storage and retrieval.

- Short-Term Memory: Captures immediate conversation or task context, limited to a buffer of up to 18 items to maintain focus on recent interactions. Each entry includes timestamps, roles (e.g., user or assistant), content, and metadata, preventing overload from transient data.

- Long-Term Memory: Stores durable knowledge using vector embeddings for semantic search, with a capacity capped at 2,000 items. Items are tagged by kind (e.g., preference, procedure, constraint) and scored for salience based on factors like length, numerical content, and capitalization, ensuring only relevant information persists.

- Episodic Memory: Records task-specific experiences, including plans, actions, outcomes, lessons learned, and failure modes. Each entry is assigned a value based on its outcome score (ranging from 0 to 1) and the task's complexity, and only high-value episodes (those above a 0.18 threshold) are retained to distill reusable insights.
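The three schemas above can be sketched with plain dataclasses. This is a minimal illustration, not the original implementation: the class and field names are hypothetical, and only the limits (18, 2,000, 0.18) come from the text.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Deque, Dict, List

SHORT_TERM_LIMIT = 18      # buffer size stated in the text
LONG_TERM_LIMIT = 2000     # long-term capacity stated in the text
EPISODE_MIN_VALUE = 0.18   # episodic retention threshold stated in the text


@dataclass
class ShortTermItem:       # hypothetical name
    role: str              # e.g. "user" or "assistant"
    content: str
    ts: float = field(default_factory=time.time)
    meta: Dict[str, Any] = field(default_factory=dict)


@dataclass
class LongTermItem:        # hypothetical name
    text: str
    kind: str              # e.g. "preference", "procedure", "constraint"
    salience: float = 0.0
    pinned: bool = False
    usage: int = 0         # how often the item has been recalled


@dataclass
class Episode:             # hypothetical name
    task: str
    plan: List[str]
    actions: List[str]
    outcome: str
    outcome_score: float   # 0..1
    lessons: List[str] = field(default_factory=list)
    failure_modes: List[str] = field(default_factory=list)


# deque(maxlen=...) drops the oldest entry automatically once the buffer is full.
short_term: Deque[ShortTermItem] = deque(maxlen=SHORT_TERM_LIMIT)

for i in range(25):
    short_term.append(ShortTermItem(role="user", content=f"message {i}"))
```

After 25 appends the buffer holds only the 18 most recent messages, which is the "focus on recent interactions" behavior described for short-term memory.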

A vector index, powered by FAISS for fast similarity searches, underpins long-term and episodic storage. A query retrieves the top 6 semantic matches and the top 3 episodic hits, ranked by a hybrid metric that combines similarity, salience, and usage decay to prioritize fresh, impactful recollections.

The choice of embedding model is one source of uncertainty. Models like all-MiniLM-L6-v2 provide normalized 384-dimensional vectors, but retrieval quality can vary on domain-specific data, and fine-tuning may be needed to keep retrieval relevance above 80%.
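The hybrid ranking can be illustrated without any dependencies. The sketch below replaces FAISS with a brute-force pure-Python cosine search so it runs anywhere. The way the three signals are multiplied together is an assumption, since the article names the signals but not the exact formula. The usage penalty is the 1/(1 + 0.15*usage) decay quoted in the retrieval discussion, and `k=6` mirrors the top-6 semantic matches.

```python
import math
from typing import List, Sequence, Tuple

USAGE_PENALTY = 0.15  # from the article's decay formula 1/(1 + 0.15*usage)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_score(similarity: float, salience: float, usage: int) -> float:
    """ASSUMED combination: similarity, scaled by salience, damped by usage."""
    decay = 1.0 / (1.0 + USAGE_PENALTY * usage)
    return similarity * (0.5 + 0.5 * salience) * decay


def recall(
    query_vec: Sequence[float],
    items: List[Tuple[str, Sequence[float], float, int]],  # (text, vector, salience, usage)
    k: int = 6,
) -> List[Tuple[float, str]]:
    """Return the k best (score, text) pairs, highest score first."""
    scored = [
        (hybrid_score(cosine(query_vec, vec), sal, usage), text)
        for text, vec, sal, usage in items
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy 2-dimensional "embeddings" purely for illustration.
items = [
    ("fresh note on usage penalties", [1.0, 0.0], 0.8, 0),
    ("same note, recalled 10 times", [1.0, 0.0], 0.8, 10),
    ("unrelated note", [0.0, 1.0], 0.9, 0),
]
results = recall([1.0, 0.0], items)
```

In this toy run the two identical vectors tie on similarity and salience, so the copy that has already been recalled ten times ranks below the fresh one. In a real system the brute-force loop would be replaced by the FAISS index the article describes.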

Storage Policies and Retrieval Strategies

To prevent memory bloat and ensure utility, policies govern what enters storage and how it is recalled. These rules emphasize quality over quantity, with novelty thresholds (e.g., 0.82 similarity cutoff) and salience minima (0.35) filtering inputs. Key policy elements include:

- Salience Scoring: A weighted formula (45% length, 20% numerical presence, 15% capitalization, plus boosts for pinned items or specific kinds) produces a score that is clipped to the range 0 to 1, favoring structured, informative content while penalizing generic short phrases.

- Novelty and Pruning: Before adding to long-term memory, similarity to existing items is checked; low-novelty candidates are discarded. Pruning removes the least salient unpinned items when limits are exceeded, maintaining efficiency.

- Episodic Valuation: Scores blend outcome success (weighted at 55%) and task length, ensuring episodes from high-performing tasks (e.g., 0.90 score for robust notebook troubleshooting) inform future actions.
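The policy elements above can be sketched as a few small functions. The weights (45/20/15 and 55%) and thresholds (0.82, 0.35) come from the text. Everything else is an assumption made for illustration: how each feature is normalized, the size of the pinned and kind boosts, and the 45% remainder assigned to task length.

```python
import re
from typing import Dict, List

NOVELTY_CUTOFF = 0.82   # similarity at or above this counts as "not novel"
MIN_SALIENCE = 0.35     # salience minimum from the text
BOOSTED_KINDS = {"preference", "procedure", "constraint"}


def clip01(x: float) -> float:
    return max(0.0, min(1.0, x))


def salience(text: str, kind: str = "note", pinned: bool = False) -> float:
    words = text.split()
    length_term = min(len(text) / 200.0, 1.0)             # ASSUMED normalization
    number_term = 1.0 if re.search(r"\d", text) else 0.0  # any digit present
    caps_term = sum(w[0].isupper() for w in words) / len(words) if words else 0.0
    score = 0.45 * length_term + 0.20 * number_term + 0.15 * caps_term
    if pinned:
        score += 0.20                                     # ASSUMED boost size
    if kind in BOOSTED_KINDS:
        score += 0.10                                     # ASSUMED boost size
    return clip01(score)


def should_store(item_salience: float, max_similarity: float) -> bool:
    """Admit only salient candidates that are not near-duplicates."""
    return item_salience >= MIN_SALIENCE and max_similarity < NOVELTY_CUTOFF


def prune(items: List[Dict], limit: int) -> List[Dict]:
    """Drop the least salient unpinned items until the limit is met."""
    kept = list(items)
    while len(kept) > limit:
        unpinned = [it for it in kept if not it["pinned"]]
        if not unpinned:
            break  # only pinned items remain; nothing may be removed
        kept.remove(min(unpinned, key=lambda it: it["salience"]))
    return kept


def episode_value(outcome_score: float, task_chars: int) -> float:
    length_term = min(task_chars / 240.0, 1.0)            # ASSUMED normalization
    return clip01(0.55 * outcome_score + 0.45 * length_term)  # 0.45 is ASSUMED


memories = [
    {"name": "a", "salience": 0.9, "pinned": False},
    {"name": "b", "salience": 0.1, "pinned": True},
    {"name": "c", "salience": 0.2, "pinned": False},
]
kept = prune(memories, limit=2)
```

Here the pinned item `b` survives pruning despite having the lowest salience, and `c` is removed instead. A short generic string such as "ok" falls well below the 0.35 minimum under these assumed normalizations.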

Retrieval employs a decay mechanism: overused items receive penalties (e.g., 1/(1 + 0.15*usage)), reducing repetition risks. Consolidation periodically extracts preferences, constraints, and procedures from short-term buffers into long-term storage via pattern matching, transforming raw interactions into actionable knowledge.

In practice, this system supports hybrid recall, where a query like “How to avoid repetitive agent memory?” yields ranked results emphasizing usage penalties and novelty enforcement, leading to responses that adapt based on prior episodes.

Implications for AI development include reduced computational overhead (potentially lowering inference costs by 20-30% through targeted recall) and enhanced reliability in agentic workflows, such as automated coding or decision support. As AI agents integrate into enterprise tools and autonomous systems, these memory architectures could drive broader adoption by mimicking human-like learning.

Would you use this framework to enhance an AI agent’s decision-making in your work?
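For readers who want to experiment, the consolidation step described above could be sketched as follows. The article says only that preferences, constraints, and procedures are extracted "via pattern matching", so the regular expressions and the function name here are hypothetical placeholders.

```python
import re
from typing import Dict, List, Tuple

# ASSUMED patterns; a real system would tune these to its own conversations.
PATTERNS = [
    ("preference", re.compile(r"\b(i prefer|i like|i'd rather)\b", re.I)),
    ("constraint", re.compile(r"\b(must|never|always|do not|don't)\b", re.I)),
    ("procedure", re.compile(r"\b(first|then|step \d+)\b", re.I)),
]


def consolidate(buffer: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Scan short-term messages and return (kind, text) long-term candidates."""
    candidates = []
    for msg in buffer:
        for kind, pattern in PATTERNS:
            if pattern.search(msg["content"]):
                candidates.append((kind, msg["content"].strip()))
                break  # first matching kind wins
    return candidates


buffer = [
    {"role": "user", "content": "I prefer concise answers."},
    {"role": "user", "content": "Never delete the cache directory."},
    {"role": "assistant", "content": "Sure."},
]
extracted = consolidate(buffer)
```

Each extracted candidate would still need to pass the salience and novelty checks before entering long-term memory. The sketch covers only the extraction step.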